My First Object Classification Model: That Ocean Trash Identifier

Do you have a similar experience?

If you enjoy swimming or paddling in the sea like me, are you sometimes confused or angered when you think you spot a plastic bag floating in the water from afar? Yet when you paddle closer, just a moment before you try to pick it up, you suddenly realise it’s a jellyfish. Oooops… good thing you didn’t touch it, otherwise you could have been stung.

A situation like this can be tricky even for humans. I wondered how well a machine using a deep learning algorithm could handle this problem.

The Current Challenge

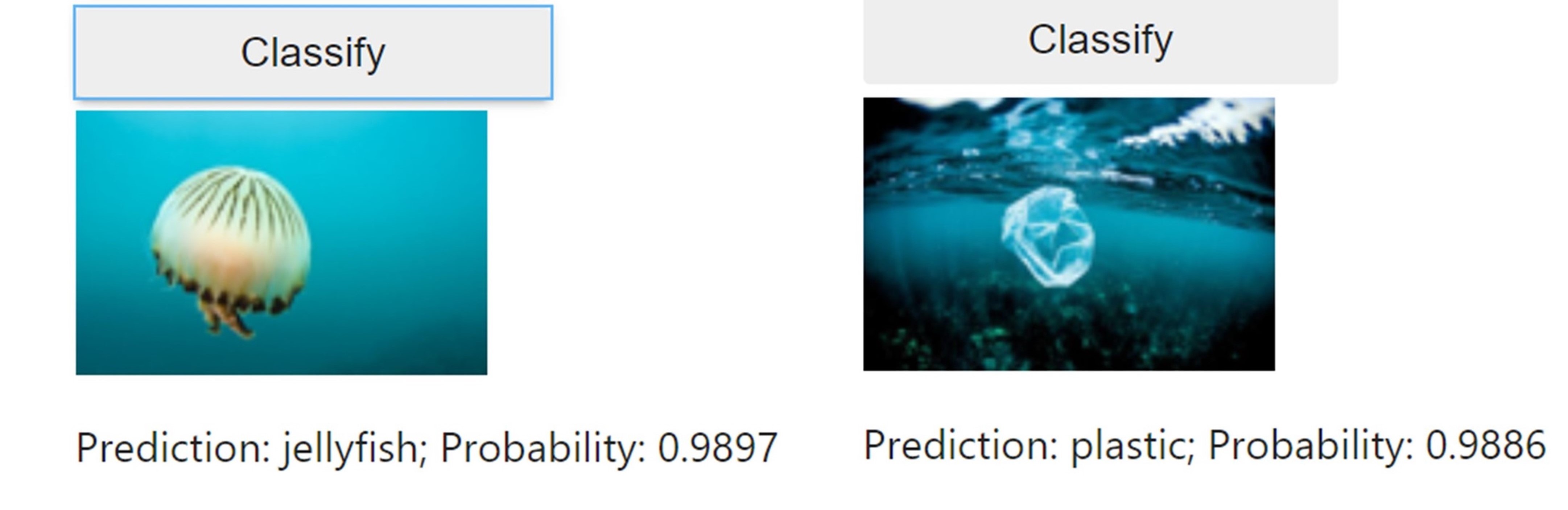

Following the FastAI approach1, I trained a classifier that would distinguish between jellyfish and plastic bags in the sea. (In a future post I will talk about the procedures involved in creating the classifier.)
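For a rough feel of what training such a classifier can look like, here is a minimal sketch. It is not the exact code behind this app. It assumes a recent fastai v2 release (where the learner constructor is named `vision_learner`; older releases call it `cnn_learner`) and a hypothetical folder with one sub-folder of images per class, such as `data/jellyfish` and `data/plastic_bag`. The helper names, the folder layout, the ResNet-18 backbone, and the four epochs are all illustrative assumptions.

```python
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images_per_class(data_dir):
    """Count image files in each class sub-folder (hypothetical layout)."""
    counts = {}
    for class_dir in sorted(Path(data_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1 for f in class_dir.iterdir()
                if f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


def train_classifier(data_dir, epochs=4):
    """Fine-tune a pretrained ResNet-18 (an assumed choice) with fastai."""
    # Imported lazily so the helper above works without fastai installed.
    from fastai.vision.all import (
        ImageDataLoaders, Resize, vision_learner, resnet18, error_rate,
    )

    # Labels come from the parent folder name; 20% of images are held out.
    dls = ImageDataLoaders.from_folder(
        data_dir, valid_pct=0.2, seed=42, item_tfms=Resize(224)
    )
    learn = vision_learner(dls, resnet18, metrics=error_rate)
    learn.fine_tune(epochs)
    return learn
```

With the images in place, `count_images_per_class("data")` gives a quick check that the classes are roughly balanced, and `learn = train_classifier("data")` followed by `learn.predict("some_photo.jpg")` would classify a new picture (the file name here is made up).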

The results were astounding! The classifier worked pretty well on previously unseen images.

Here are the outcomes:

“That Ocean Trash Identifier!” – Give it a try yourself?

(Note: The app runs on Binder, a free service that provides hardware and software to run your code online. It may take a bit of time to load the app when you first visit the page.)

One extension of this project would be a model that works on video, so that the next time you are out paddling, it can tell you whether the object floating in the distance is a plastic bag or a jellyfish before you pick it up.

Woman, Man, Camera, TV

Remember this video, in which the current President of the United States of America claimed that he was ‘cognitively there’ and had aced a ‘difficult’ cognitive test that required him to memorise items like Person, Woman, Man, Camera, TV?

Simply for fun, I have also built a classifier for identifying Woman, Man, Camera, TV.

(Kudos to JiangHong who came up with this idea originally – such a genius! I cracked up so much when I first came across this post.)

And here is my attempt: “A Woman-Man-Camera-TV Identifier”!

My Thoughts, so far

Overall, the FastAI library is very user friendly. It is easy to use and understand.

I always thought you needed loads of data to train a good model. FastAI shows that this is not necessarily the case. With the endless supply of images available online, I ended up needing fewer than 150 pictures for each object type (plastic bag / jellyfish) to get an almost-perfect classifier.

Data cleaning is an important process. Model predictions depend on, and are shaped by, the training data. (This point is discussed further in the last section.) In my experience, cleaning the data is still very labor-intensive. I would like to know more about how companies tackle this issue in practice.
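One common way to make cleaning less tedious is to review first the images the model gets most wrong, since mislabelled or irrelevant pictures tend to show up there. Below is a minimal plain-Python sketch of that idea. The function name, file names, and probabilities are made up for illustration, and this is not necessarily how the data for this project was cleaned.

```python
import math


def top_losses(records, k=3):
    """Return the k file names with the highest cross-entropy loss.

    records: list of (file_name, true_label, {label: predicted_probability})
    """
    scored = []
    for file_name, true_label, probs in records:
        # Probability the model gave to the labelled class (floored to avoid log(0)).
        p = max(probs.get(true_label, 0.0), 1e-12)
        scored.append((-math.log(p), file_name))
    # Highest loss first: these are the images worth checking by hand.
    scored.sort(key=lambda item: item[0], reverse=True)
    return [file_name for _, file_name in scored[:k]]


# Hypothetical predictions on three labelled images.
records = [
    ("a.jpg", "jellyfish", {"jellyfish": 0.99, "plastic_bag": 0.01}),
    ("b.jpg", "plastic_bag", {"jellyfish": 0.90, "plastic_bag": 0.10}),
    ("c.jpg", "jellyfish", {"jellyfish": 0.60, "plastic_bag": 0.40}),
]
suspects = top_losses(records, k=2)
```

Here `b.jpg` would be reviewed first: the model was confident it was a jellyfish although it is labelled as a plastic bag, so either the label or the model is wrong.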

Caveats about these classifiers

Deep Learning is not everything. It certainly has limitations.

- Predictions can be largely influenced by the training data. That is, what can be predicted is constrained by the data you use to train the model.

- In other words, the model can be biased. When I started developing the ‘Woman-Man-Camera-TV’ classifier, I realised it constantly mis-identified men wearing caps or other head accessories, and women with shaved heads. So from the very beginning I had to go back and forth to make sure the image set included examples representing a good diversity of people. (See also the chapter on Data Ethics in FastAI2.)

- This is a very simple classifier. It treats a whole image as a single entity. So, if you have both a plastic bag and a jellyfish in the same picture, the classifier may struggle to tell you which one is there.
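The last caveat comes from how a single-label classifier turns its raw scores into an answer: a softmax makes the classes compete, so exactly one label wins. A multi-label setup scores each class independently instead, so it can report both objects or neither. The sketch below illustrates the difference in plain Python. The scores are invented, the function names are hypothetical, and none of this is taken from the app itself.

```python
import math

LABELS = ["jellyfish", "plastic_bag"]


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(score):
    """Turn one raw score into an independent probability."""
    return 1.0 / (1.0 + math.exp(-score))


def single_label_prediction(scores):
    """Pick exactly one label, as a single-label classifier does."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best]


def multi_label_prediction(scores, threshold=0.5):
    """Keep every label whose own probability clears the threshold."""
    return [label for label, s in zip(LABELS, scores) if sigmoid(s) >= threshold]


# Made-up raw scores for a photo containing both objects.
both_scores = [2.0, 1.8]
# Made-up raw scores for a photo containing neither.
neither_scores = [-3.0, -2.0]
```

With these numbers, the single-label version answers "jellyfish" for the first photo and still has to pick a label for the second, while the multi-label version returns both labels for the first photo and an empty list for the second.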

I am only a few weeks into my learning journey with FastAI. I am already having so much fun, and a lot of ideas are popping into my mind.